Kenny Eliason on Unsplash"} />

### A Primer on `@defer`

Angular's [`@defer`](https://angular.dev/guide/templates/defer) block lets developers delay loading and initializing part of a component's template until a [certain condition is met](https://angular.dev/guide/templates/defer#controlling-deferred-content-loading-with-triggers), for example when the content enters the viewport or when the user interacts with a specific element.

In our case, we utilize [Angular Material Tabs](https://material.angular.dev/components/tabs/overview) with two tabs displaying two pieces of data:

```html

```

One of our tabs contains a component with data that we don't want to load until that tab is clicked. We can wrap it in a `@defer` block so that the component is only initialized when it enters the viewport:

```html

@defer(on viewport) {

}

```

### Referencing content within `@defer` blocks

Suppose we want to reference the deferred component, which has a `photoCount()` property (`GalleryViewComponent.photoCount`), elsewhere in the same template. We cannot directly use a [template reference variable](https://angular.dev/guide/templates/variables#template-reference-variables) for this:

```html twoslash

@if(tabIndex === 1) {

{{ galleryViewRef.photoCount() }} photos

// [!code focus]

// @error: Will throw error: Property 'photoCount' does not exist on type 'undefined'.

}

@defer(on viewport) {

// [!code focus]

}

```

Because `galleryViewRef` is declared inside the `@defer` block, it is undefined outside that block, so `galleryViewRef.photoCount()` fails.

To reference the deferred component properly, we'll use a [`viewChild`](https://angular.dev/guide/components/queries#view-queries) query in the component's TypeScript file:

```ts

@Component({

template: `

@if(tabIndex === 1) {

{{ galleryComponent()?.photoCount() }} photos

}

@defer(on viewport) {

}

`

})

export class HomeComponent {

galleryComponent = viewChild<GalleryViewComponent>('galleryViewRef');

tabIndex = 0;

}

```

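`viewChild` returns a signal that keeps its result up to date as the application state changes, so `galleryComponent()` returns `undefined` until the deferred block renders (here, when it enters the viewport). That is why the template uses optional chaining: `galleryComponent()?.photoCount()`.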

A component wrapped in an `@if` block behaves the same way: a template reference declared inside the block has no value outside it. So we'll also need `viewChild` to reference content inside an `@if` block from elsewhere in the template.

import { Callout } from 'vocs/components'

import { CoverPhoto } from '../../../components/CoverPhoto'

import { AuthorAndDate } from '../../../components/AuthorAndDate'

## The gap between man and machine

Eddie Pipocas on Unsplash"} />

Customer research (my definition):

The skill of asking questions in search of an understanding of user behavior, in order to make a product that works for its users and to discover ways to differentiate the product from the pack.

As a builder, I always have a singular focus on the thing I am working on, from how creative or elegant the solutions are to how many of the latest and greatest tools I have used in solutionizing.

And I take some pride in the development process. Research and design, on the other hand, require a bit of detachment from starting with solutions, and a focus instead on the user and their problems.

It can seem humbling at first to view the user as, in fact, more knowledgeable than me, a builder, in order to empathize with them and understand their needs. However, as I discover how much there is to learn from talking to users, I find that this position quickly becomes empowering, because I don't have to come up with the answers or a version of a solution that I think will work for a user. Instead, when I really listen to a user, the answers lie in plain sight.

For me as a developer, a lot of job dissatisfaction comes from the product's detachment from the user, as I become more enamored with the tools and fail to see what impact the product is having. A lot of designers (and design researchers), on the other hand, take the time to work with people and are focused on bridging the gaps in their knowledge.

This shows at my work, where a lot of user-centered people choose to do design and research or take on product management roles, while the developers (builders) want to play with toys and keep building more and more until they outdo each other. While designers tend to the needs of the user and advocate for them, developers sit in front of their screens pumping out lines of code like our lives depend on it, literally and figuratively.

One of the most important metrics when working as a developer is technical impact on a project (or several).

This measure inherently pits developers on a scale from most to least impactful, rewarding those who are the most skillful, creative, fast and efficient.

In other words, being able to build the biggest thing is what a developer is meant to do in their career.

Bridging that gap between man and machine is a necessary pursuit, as idealistic as it may be.

Most of the time, we will fail, or get distracted by wanting to use the machine so badly that we will try to adjust ourselves to fit the machines instead of building ones that work for us.

This work requires both good design in all its aspects and development skill to execute the designs.

*Perhaps this is where the gap is.*

import { Callout } from 'vocs/components'

import { CoverPhoto } from '../../../components/CoverPhoto'

import { AuthorAndDate } from '../../../components/AuthorAndDate'

export const {author, authorLink, publishedOn} = frontmatter;

## Utilizing Angular's `provideAppInitializer` to load a module federation remote during application initialization

Takashi Miyazaki on Unsplash"} />

### TLDR:

In a micro-frontend setup with Angular and module federation, we can intercept Angular's bootstrapping process with [`provideAppInitializer`](https://angular.dev/api/core/provideAppInitializer) to execute code from a remote application before the host application fully initializes.

### Problem Context

Our team works on a micro-frontend Angular application that is deployed to multiple environments, including testing, staging, and production.

Each of these environments has different environment variables, such as API endpoints, CDN URLs, etc.

No secrets are stored in these environment files because they are all shipped to the client side.

We use NX for our micro-frontend setup, which makes it easier to manage. The NX docs provide [very good documentation on getting started with NX, Angular and Module Federation.](https://nx.dev/docs/technologies/angular/guides/dynamic-module-federation-with-angular)

When our micro-frontend is deployed to an environment, the pipeline swaps out the values in the compiled `environment.ts` file for that environment's values.

The microfrontend (in our case called `remote-app`) [exposes](https://module-federation.io/configure/exposes.html) two remotes in the `module-federation.config.ts` file:

1. `remoteRoutes` - routes that configure the child routing logic

2. `environment` - the environment file that contains environment variables

Think of a remote as a JavaScript file containing a piece of code to be "fetched" by another application.

```ts twoslash

import {ModuleFederationConfig} from '@nx/module-federation';

// ---cut---

const moduleFederationConfig: ModuleFederationConfig = {

name: 'remote-app',

exposes: {

'./remoteRoutes': 'apps/remote-app/src/app/routes.ts',

'./environment': 'apps/remote-app/src/environments/environment.ts',

},

};

```

These remotes can then be fetched from the host application using the module federation `loadRemote` function from `@module-federation/enhanced/runtime`, for example:

```ts twoslash

// [!include ~/snippets/2026/initializing-env-variables-in-ng-mf.ts]

// bootstrap.ts in the host application

import { bootstrapApplication } from '@angular/platform-browser';

import { provideRouter } from '@angular/router';

import { loadRemote } from '@module-federation/enhanced/runtime';

// ---cut---

bootstrapApplication(AppComponent, {

providers: [

provideRouter([

{ path: '', component: HomeComponent },

// @noErrors

/* This loads the remote routes to the applications router such that going to /remoteUrl loads the routes from the microfrontend */

{ path: 'remoteUrl', loadChildren: () => loadRemote('remote-app/remoteRoutes').then(m => m.remoteRoutes)} // [!code focus]

]),

// other providers...

]

})

```

The set up would look like this figuratively:

The environment file mostly contains variables that are specific to each environment.

These values need to be available at run time.

An example of an environment file would be:

```ts twoslash

export const environment = {

apiUrl: 'https://api-dev.our-app.com/v1.0/',

cdnUrl: 'https://cdn-dev.our-app.com/',

appInsightsUrl: 'https://app-insights-dev.our-app.com/',

} as const

```

This environment file for our micro-frontend is hosted on the remote URL, so the host application does not know its values at the point of initialization.

The challenge then was how to load this environment file and make it available to the host application when the application starts up (at initialization).

### Utilizing `provideAppInitializer`

Angular provides a way to run asynchronous code during the bootstrapping phase through [`provideAppInitializer`](https://angular.dev/api/core/provideAppInitializer), which registers a function to run at application startup.

If the function we pass in returns a Promise or an Observable, Angular will not complete initialization until the Promise resolves or the Observable completes.
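To make that ordering concrete, here is a framework-free sketch of the behavior: every initializer runs, startup waits for all returned promises, and only then does the app start. `bootstrapAfterInitializers` and `Initializer` are hypothetical names, this is not how Angular implements it internally, and the sketch only handles promises (Angular also accepts Observables).

```ts
// Hypothetical initializer type: a function that may return a Promise (async
// work to wait for) or nothing (synchronous work). Observables are left out.
type Initializer = () => Promise<unknown> | void;

// Framework-free sketch: run every initializer, wait until all returned
// promises resolve, and only then start the app. If any promise rejects,
// `start` is never called and the returned promise rejects.
async function bootstrapAfterInitializers<T>(
  initializers: Initializer[],
  start: () => T,
): Promise<T> {
  // Promise.all treats non-promise values (e.g. undefined) as already resolved.
  await Promise.all(initializers.map((init) => init()));
  return start();
}

// Usage: record when the "app" starts relative to the async initializer.
const startupLog: string[] = [];

const started = bootstrapAfterInitializers(
  [
    // Simulates async work such as loading a remote environment file.
    () =>
      Promise.resolve().then(() => {
        startupLog.push('environment loaded');
      }),
  ],
  () => {
    startupLog.push('app started');
    return startupLog;
  },
);
```

If any initializer's promise rejects, `Promise.all` rejects and `start` never runs, which roughly mirrors how a failing initializer keeps Angular's bootstrap from completing.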

We first need to change how we pass in our environment variables and load them into a service. We also need to define a function to run in the `provideAppInitializer` callback. For our example, we will name it `initialize()` (but you can call it something else, such as `init` or `setup`):

```ts twoslash

// [!include ~/snippets/2026/app-env-service.ts:appenvservice]

```

Notice here that our environment service contains a function that returns a promise.

This is the function that we would pass into the `provideAppInitializer` function:

***

In the `bootstrap.ts` file for our host application, we can then initialize our `AppEnvService` to set up environment variables:

```ts twoslash

// [!include ~/snippets/2026/initializing-env-variables-in-ng-mf.ts]

// [!include ~/snippets/2026/app-env-service.ts]

import { bootstrapApplication } from '@angular/platform-browser';

import { provideRouter } from '@angular/router';

import { loadRemote } from '@module-federation/enhanced/runtime';

import { provideAppInitializer } from '@angular/core'

// ---cut---

bootstrapApplication(AppComponent, {

providers: [

// Inject AppEnvService and call its initialize function

provideAppInitializer(() => inject(AppEnvService).initialize()), // [!code focus]

provideRouter([

{ path: '', component: HomeComponent },

// @noErrors

{ path: 'remoteUrl', loadChildren: () => loadRemote('remote-app/remoteRoutes').then(m => m.routes)}

]),

// other providers...

]

})

```

During application startup, Angular runs the async `AppEnvService.initialize()` function, which fetches our environment variables and stores them in `AppEnvService`.
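The `AppEnvService` itself comes from an included snippet that isn't shown here. As a rough, framework-free sketch of the idea (not the actual service), assume a class that receives a loader function standing in for `loadRemote('remote-app/environment')`, stores the loaded values in `initialize()`, and refuses to hand out values before that promise resolves. `RemoteEnvironment`, `EnvLoader`, and `get()` are hypothetical names; in Angular the class would be an injectable service and the loader would be the real `loadRemote` call.

```ts
// Shape of the exposed environment module, based on the environment file above.
interface RemoteEnvironment {
  apiUrl: string;
  cdnUrl: string;
  appInsightsUrl: string;
}

// Hypothetical loader: stands in for a call such as
// loadRemote('remote-app/environment'), which can resolve to null.
type EnvLoader = () => Promise<{ environment: RemoteEnvironment } | null>;

// Framework-free sketch of an environment service (not the author's code).
class AppEnvService {
  private env: RemoteEnvironment | null = null;
  private readonly loadEnv: EnvLoader;

  constructor(loadEnv: EnvLoader) {
    this.loadEnv = loadEnv;
  }

  // The promise returned here is what an app initializer would hand to Angular.
  async initialize(): Promise<void> {
    const remoteModule = await this.loadEnv();
    if (remoteModule === null) {
      throw new Error('Could not load the remote environment module');
    }
    this.env = remoteModule.environment;
  }

  // Reads a value, failing loudly if initialize() has not completed yet.
  get<K extends keyof RemoteEnvironment>(key: K): RemoteEnvironment[K] {
    if (this.env === null) {
      throw new Error('AppEnvService used before initialize() completed');
    }
    return this.env[key];
  }
}

// Usage with a fake loader in place of the real remote module.
const envService = new AppEnvService(async () => ({
  environment: {
    apiUrl: 'https://api-dev.our-app.com/v1.0/',
    cdnUrl: 'https://cdn-dev.our-app.com/',
    appInsightsUrl: 'https://app-insights-dev.our-app.com/',
  },
}));

const apiUrlAfterInit = envService.initialize().then(() => envService.get('apiUrl'));
```

Failing loudly in `get()` makes it obvious when some code reads the environment before the initializer has finished, which is exactly the situation running it in `provideAppInitializer` is meant to prevent.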

### Epilogue

The main reason for implementing module federation was that two teams working on different parts of the application wanted to deploy independently.

This is not really what micro-frontends were designed for, since our micro-frontend does not operate independently.

I do wonder what the [`loadRemote`](https://module-federation.io/guide/basic/runtime/runtime-api#runtime-api) function does under the hood to fetch and load the remote routes, and how it gives them the same Angular context as the host application.

Something to investigate further!

## A More Type Safe-ish Angular Router

> Attempting to type the Angular Router navigation functions (`Router.navigate` and `Router.navigateByUrl`).

### Angular Routing Overview

Routing in Angular is mostly straightforward and opinionated when using Angular's built-in router.

#### Creating Routes

To create routes in Angular, a developer first creates an array of type `Array<Route>` (aliased as `Routes`) and passes it to the `ApplicationConfig` through `provideRouter`. The following is a simple routing setup with [static URL paths](https://angular.dev/guide/routing/define-routes#static-url-paths):

```ts

import { ApplicationConfig } from '@angular/core'

import { provideRouter, Routes } from '@angular/router'

import { HomeComponent } from '~/components/home/home.ts'

import { DashboardComponent } from '~/components/dashboard/dashboard.ts'

import { AnalyticsComponent } from '~/components/analytics/analytics.ts'

import { ReportsComponent } from '~/components/reports/reports.ts'

import { SettingsComponent } from '~/components/settings/settings.ts'

const routes: Routes = [

{

path: '',

component: HomeComponent,

},

{

path: 'dashboard',

component: DashboardComponent,

children: [

{

path: 'analytics',

component: AnalyticsComponent,

},

{

path: 'reports',

component: ReportsComponent,

},

],

},

{

path: 'settings',

component: SettingsComponent,

},

]

/* Pass Routes to Application Config */

export const appConfig: ApplicationConfig = {

providers: [

//...other providers

provideRouter(routes),

],

}

```

#### Routing Tasks

Within a component, routing can be performed from the component's template or class.

##### Routing from the Component Template

A user might click a link or a button to go to another page. To route from the template, a developer adds the `RouterLink` directive to the component's `imports` array and then uses the `routerLink` directive in the template:

```ts

import { Component } from '@angular/core'

import { RouterLink } from '@angular/router'

@Component({

template: `

`,

imports: [RouterLink, DashboardComponent, SettingsComponent],

})

export class HomeComponent {}

```

##### Routing from within the Component's Class

To route from within a component's class, a developer injects the `Router` into the component and calls either the [`Router.navigate`](https://angular.dev/guide/routing/navigate-to-routes#routernavigate) or the [`Router.navigateByUrl`](https://angular.dev/guide/routing/navigate-to-routes#routernavigatebyurl) function:

```ts twoslash

import { Router, ActivatedRoute } from '@angular/router'

import { Component, inject } from '@angular/core'

@Component({ template: '' })

// ---cut---

export class HomeComponent {

router = inject(Router);

activatedRoute = inject(ActivatedRoute);

/* Using Router.navigate */

goToDashboard() {

this.router.navigate(['/dashboard'], {

relativeTo: this.activatedRoute

});

}

/* Using Router.navigateByUrl */

goToSettings() {

this.router.navigateByUrl('/settings');

}

}

```

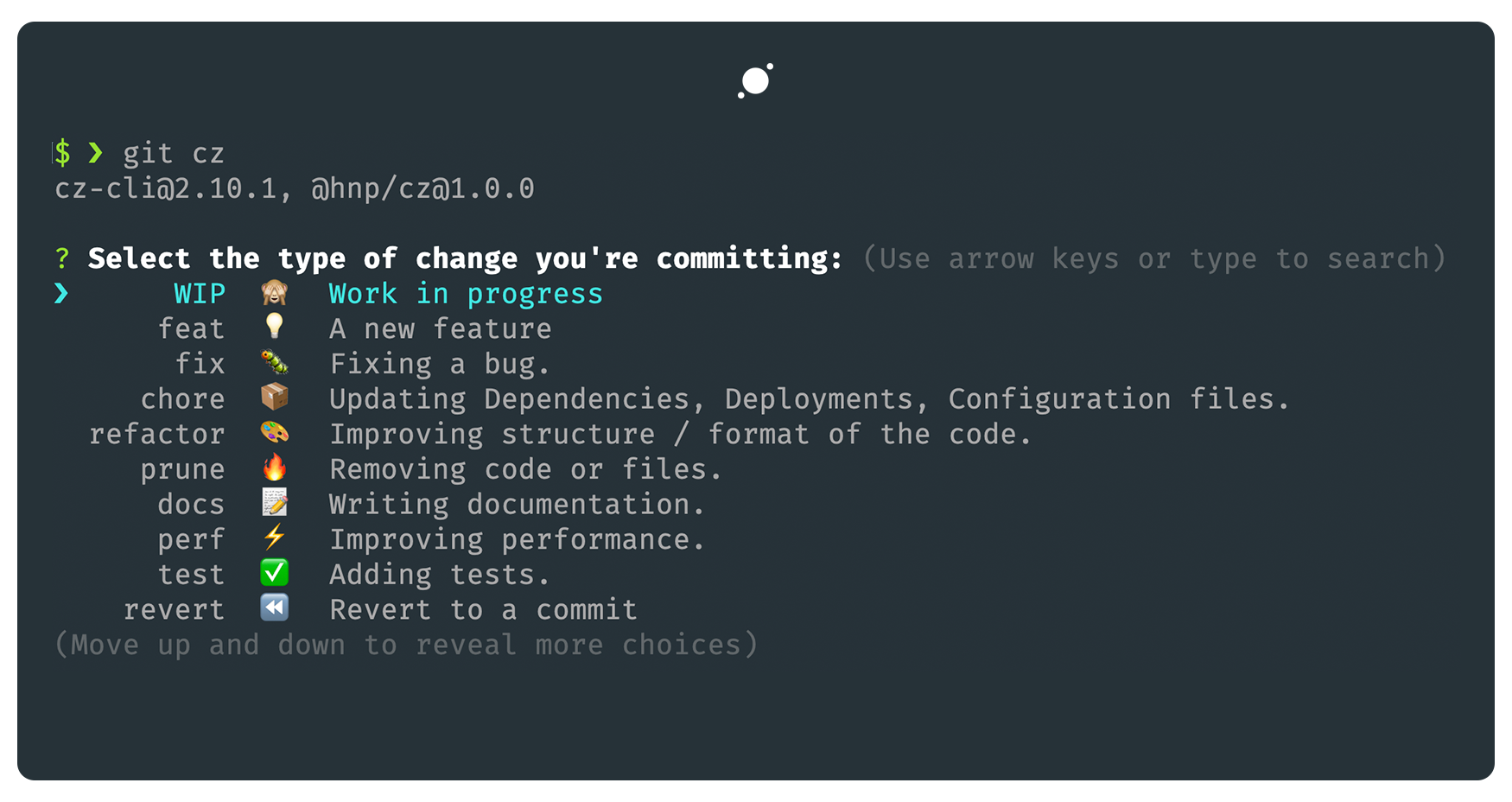

### Motivation: Why Attempt to Add Types

When calling the Router's `.navigate` or `.navigateByUrl` functions, a developer can pass in any string and the TypeScript compiler will not report an error. To handle a user navigating to a route that doesn't exist, a developer can add a catch-all (wildcard) route:

```ts twoslash

import { Routes } from '@angular/router';

// ---cut---

const routes: Routes = [

{

path: '**',

redirectTo: '/'

}

]

```

The incentive to add more type safety to the Router is mainly developer ergonomics (DX): catching invalid routes while writing the code, instead of in the browser or, worse still, after the application is deployed.

For instance, a call to a non-existent route:

```ts

import { Router } from '@angular/router';

import { Component, inject } from '@angular/core';

@Component({template: ''})

export class SomeComponent {

router = inject(Router)

routeToSomewhere() {

this.router.routeByUrl("non-existent-route");

}

}

```

should produce a compile-time error such as:

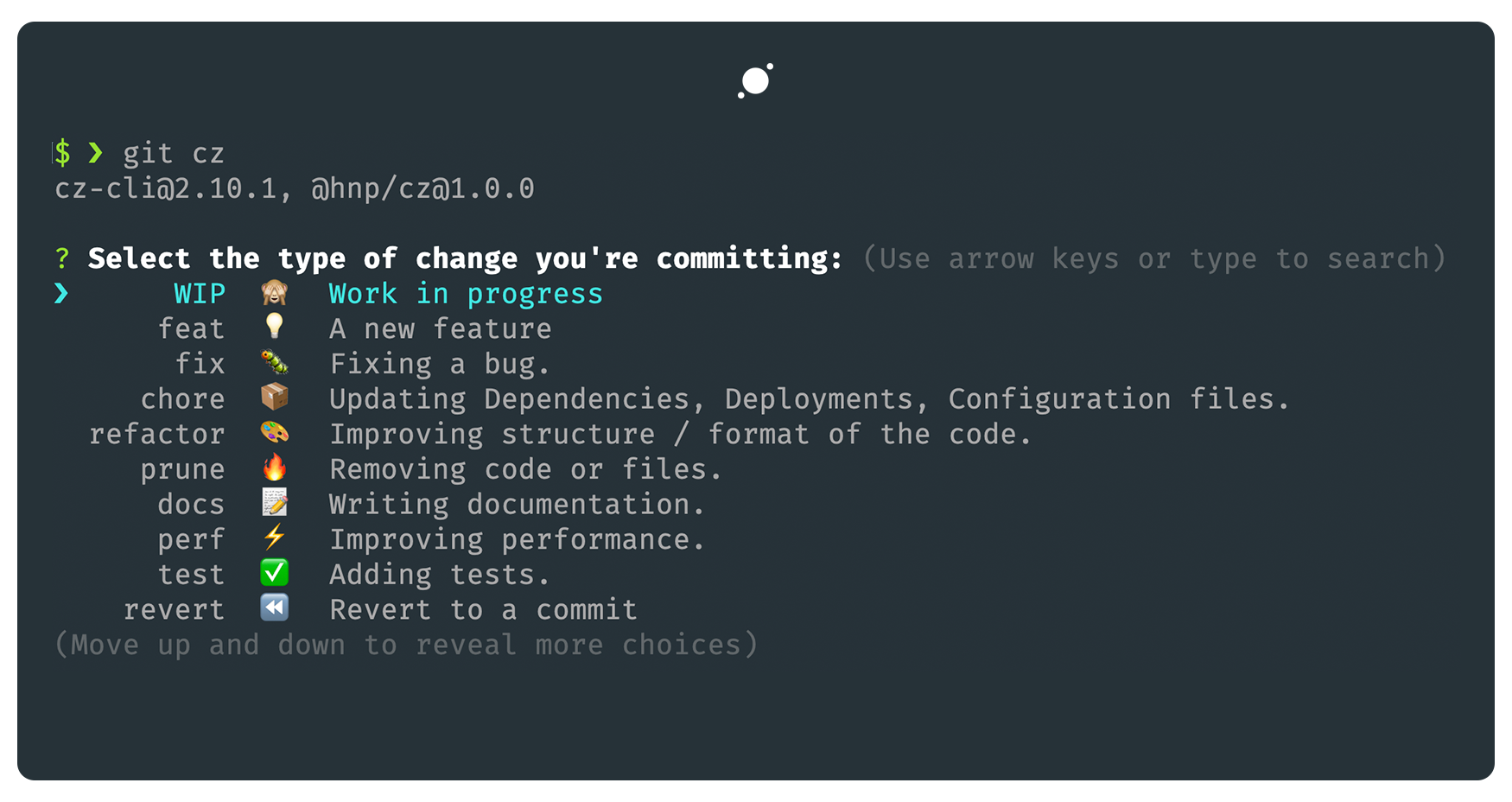


In the example above, the type `TRoutePath` is a union of valid routes, which helps a developer track down the error faster.

### Building Out the Types from the Routes Array

Once the routes array is configured, we need to derive a type from it such as:

```ts twoslash

type TRoutePath = '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings'

```

While a developer can write out the paths manually, there would then be two places to update whenever the routes change. This is less than ideal, but may be manageable for an application with few routes.

A better approach is to make the routes array the source of truth and generate the type from it. To do this, we will write a generic type that takes in the routes.

#### Applying `const` Assertion and the `satisfies` operator

The first thing we need to do is apply a [const assertion](https://www.typescriptlang.org/docs/handbook/release-notes/typescript-3-4.html#const-assertions) to the `routes` array and combine it with the [`satisfies` operator](https://www.typescriptlang.org/docs/handbook/release-notes/typescript-4-9.html#the-satisfies-operator). The const assertion keeps each `path` as a string literal type (for example `'dashboard'` rather than `string`), which we need in order to build a union of paths, while `satisfies` checks that `routes` matches the `Routes` type without widening it:

```ts twoslash

import { Routes } from '@angular/router'

import { Component } from '@angular/core'

@Component({ template: '' })

class DashboardComponent {}

// ---cut---

const routes = [

{

path: 'dashboard',

component: DashboardComponent

}

//... more routes

] as const satisfies Routes

```

We can then capture the type of `routes`:

```ts

type TRoutes = typeof routes;

```
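A small self-contained sketch shows why both pieces matter. It swaps Angular's `Route` for a hypothetical `RouteLike` type so it compiles without Angular. Because `as const` turns every array literal into a readonly tuple, `RouteLike` and the `satisfies` target use readonly arrays; TypeScript does not accept a readonly array where a mutable array type is expected.

```ts
// Hypothetical minimal stand-in for Angular's Route. Arrays are readonly
// because `as const` turns every array literal into a readonly tuple.
interface RouteLike {
  readonly path?: string;
  readonly children?: readonly RouteLike[];
}

// Without `as const`, each `path` widens to plain `string`.
const widenedRoutes = [{ path: 'dashboard' }, { path: 'settings' }] satisfies readonly RouteLike[];
type WidenedPath = (typeof widenedRoutes)[number]['path']; // string

// With `as const`, the literal values survive and `satisfies` still checks the shape.
const literalRoutes = [{ path: 'dashboard' }, { path: 'settings' }] as const satisfies readonly RouteLike[];
type LiteralPath = (typeof literalRoutes)[number]['path']; // 'dashboard' | 'settings'
```

Only the second form gives us something we can turn into a union of valid paths.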

#### Creating a Generic Function to Extract the types

We need a way to extract the paths from the `routes` array: a generic type that takes `TRoutes` as input and outputs our union of valid routes.

##### Drafting out the Generic type

One way to think about the generic type is as a function that takes a single `Route` as input, recurses through its children, and returns the route's path combined with its children's paths.

Written as a function, that would look like this:

```ts

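import { Route } from '@angular/router';

function getRoutePaths(route: Route, parentPrefix = ''): string[] {
  /* Routes without a path (e.g. matcher-based routes) are skipped */
  if (route.path === undefined) {
    return []
  }

  const fullPath = `${parentPrefix}${route.path}`

  /* The base case is where the route has no children */
  if (!route.children?.length) {
    return [fullPath]
  }

  /* Otherwise, return this route's path plus every descendant's full path,
     passing "<this route's path>/" down as the children's prefix */
  return [
    fullPath,
    ...route.children.flatMap((childRoute) => getRoutePaths(childRoute, `${fullPath}/`)),
  ]
}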

```

This helps us build out our generic type, which we will call `TRoutePaths`. It takes a route as input and recurses through all of its child routes:

```ts twoslash

import { Route } from '@angular/router';

// ---cut---

type TRoutePaths =

/* Loop through each of the children - TDeepChild */

TRoute extends { children: infer ChildRoutes extends Route[] }

? /**

* const childrenPaths = getRoutePaths(route, parentRoutePrefix)

* const childPath = route.path

* return childrenPaths + `${parentPrefixRoute}childPath` + childPath

**/

TRoutePaths | `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: never

```

Applying this to `TRoutes` to extract the paths gives us:

```ts twoslash

import { Route, Routes } from '@angular/router';

const routes = [

{

path: '',

},

{

path: 'dashboard',

children: [

{

path: 'analytics',

},

{

path: 'reports',

},

],

},

{

path: 'settings',

},

] as const satisfies Routes;

type TRoutePaths =

/* Loop through each of the children - TDeepChild */

TRoute extends { children: infer ChildRoutes extends Route[] }

? /**

* const childrenPaths = getRoutePaths(route, parentRoutePrefix)

* const childPath = route.path

* return childrenPaths + `${parentPrefixRoute}childPath` + childPath

**/

TRoutePaths | `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: never

// ---cut---

type TRoutes = typeof routes;

type TAppRoutePaths = TRoutePaths

```

The above `TAppRoutePaths` is equivalent to:

```ts twoslash

type TRoutePath = '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings'

```
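For a version that can be compiled and checked on its own, here is a sketch that replaces Angular's `Route` with a hypothetical `RouteLike` type. `FullRoutePaths` follows the same recursion as `TRoutePaths`, but, as an assumption of this sketch, it only emits full paths such as `'dashboard/analytics'` (no bare child paths), so the result matches the union above. A runtime `collectRoutePaths` function mirrors the type so the two can be compared.

```ts
// Hypothetical minimal stand-in for Angular's Route (readonly arrays so
// that `as const` route tuples are accepted).
interface RouteLike {
  readonly path?: string;
  readonly children?: readonly RouteLike[];
}

const routes = [
  { path: '' },
  {
    path: 'dashboard',
    children: [{ path: 'analytics' }, { path: 'reports' }],
  },
  { path: 'settings' },
] as const satisfies readonly RouteLike[];

// Full paths for one route: its own path plus, recursively, the full paths of
// its children prefixed with "<parent path>/". Note: a parent with path ''
// would give its children a leading '/'; this sketch doesn't special-case it.
type FullRoutePaths<TRoute, Prefix extends string = ''> =
  TRoute extends { readonly path: infer Path extends string }
    ? TRoute extends { readonly children: infer Children extends readonly RouteLike[] }
      ? `${Prefix}${Path}` | FullRoutePaths<Children[number], `${Prefix}${Path}/`>
      : `${Prefix}${Path}`
    : never;

// Distributes over the union of top-level routes:
// '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings'
type AppRoutePaths = FullRoutePaths<(typeof routes)[number]>;

// Runtime counterpart that walks the same structure.
function collectRoutePaths(routeList: readonly RouteLike[], prefix = ''): string[] {
  const paths: string[] = [];
  for (const route of routeList) {
    if (route.path === undefined) continue; // path-less routes are skipped
    const fullPath = `${prefix}${route.path}`;
    paths.push(fullPath, ...collectRoutePaths(route.children ?? [], `${fullPath}/`));
  }
  return paths;
}

// Compile-time check: every entry must be a member of AppRoutePaths.
const allPaths: AppRoutePaths[] = ['', 'dashboard', 'dashboard/analytics', 'dashboard/reports', 'settings'];
```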

##### Applying our Extracted Type to Angular Router

To add typed navigation to the Angular router, we extend `Router.prototype` with two new methods that use our typed routes. We can augment the `@angular/router` module's types (see [TypeScript Modules](https://www.typescriptlang.org/docs/handbook/namespaces-and-modules.html#using-modules)) and add two new methods, `route` and `routeByUrl`, that use our `TAppRoutePaths` type.

##### Helper Types

We will need a few helper types to extract the argument and return types of the `.navigate` and `.navigateByUrl` functions:

```ts twoslash

import { Router } from '@angular/router';

// ---cut---

type TRestOfNavigateMoreArgs = Parameters<Router['navigate']> extends [infer Arg, ...infer Rest] ? Rest : never

type TNavigateReturn = ReturnType<Router['navigate']>

type TRestOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Rest : never

type TFirstOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Exclude<Arg, string> : never

type TNavigateByUrlReturn = ReturnType<Router['navigateByUrl']>

```
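The helpers all follow one pattern: `Parameters<F>` produces a tuple of a function's parameter types, and matching that tuple against `[infer First, ...infer Rest]` splits off the first element. Here is a self-contained sketch of the pattern applied to a hypothetical `navigateByUrlLike` function shaped loosely like `Router.navigateByUrl` (a URL that is either a string or a tree object, plus optional extras). `UrlTreeLike`, `NavigationExtrasLike`, and `AppPath` are assumptions of the sketch, not Angular's types.

```ts
// Hypothetical stand-ins shaped loosely like Router.navigateByUrl's parameters.
interface UrlTreeLike {
  readonly segments: readonly string[];
}

interface NavigationExtrasLike {
  readonly replaceUrl?: boolean;
}

function navigateByUrlLike(url: string | UrlTreeLike, extras?: NavigationExtrasLike): Promise<boolean> {
  // A real router would navigate here; this stand-in just reports success.
  void url;
  void extras;
  return Promise.resolve(true);
}

type NavigateParams = Parameters<typeof navigateByUrlLike>;

// Everything after the first parameter: [extras?: NavigationExtrasLike]
type RestOfArgs = NavigateParams extends [unknown, ...infer Rest] ? Rest : never;

// The first parameter with `string` removed, leaving only UrlTreeLike.
type FirstArgWithoutString = NavigateParams extends [infer First, ...unknown[]] ? Exclude<First, string> : never;

// Promise<boolean>
type NavigateReturn = ReturnType<typeof navigateByUrlLike>;

// Hypothetical app paths, and a narrowed signature assembled from the helpers.
type AppPath = 'dashboard' | 'settings';
type TypedNavigateByUrl = (url: FirstArgWithoutString | AppPath, ...args: RestOfArgs) => NavigateReturn;

// The original function is assignable because it accepts a wider first argument.
const typedNavigateByUrl: TypedNavigateByUrl = navigateByUrlLike;
```

Removing `string` from the first parameter is what makes the narrowed signature useful: the union of known paths then replaces "any string", while the non-string option stays available.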

##### Extending `Router.navigate` and `Router.navigateByUrl`

We can then augment the `@angular/router` module by declaring the `Router` interface with our new methods, `Router.route` and `Router.routeByUrl`. We then extend `Router.prototype`, assigning the existing navigation methods as their implementation.

```ts

import { Router } from '@angular/router'

declare module '@angular/router' {

interface Router {

route: (commands: readonly TAppRoutePaths[], ...args: TRestOfNavigateMoreArgs) => TNavigateReturn

routeByUrl: (

url: TFirstOfNavigateByUrlArgs | TAppRoutePaths,

...args: TRestOfNavigateByUrlArgs

) => TNavigateByUrlReturn

}

}

Router.prototype.route = Router.prototype.navigate

Router.prototype.routeByUrl = Router.prototype.navigateByUrl

```

And there we have it. We can now use `Router.route` and `Router.routeByUrl` in our application:

```ts twoslash

import { Router, Route, Routes } from '@angular/router';

import { inject, Component } from '@angular/core';

const routes = [

{

path: '',

},

{

path: 'dashboard',

children: [

{

path: 'analytics',

},

{

path: 'reports',

},

],

},

{

path: 'settings',

},

] as const satisfies Routes;

type TRoutePaths =

/* Loop through each of the children - TDeepChild */

TRoute extends { children: infer ChildRoutes extends Route[] }

? /**

* const childrenPaths = getRoutePaths(route, parentRoutePrefix)

* const childPath = route.path

* return childrenPaths + `${parentPrefixRoute}childPath` + childPath

**/

TRoutePaths | `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: never

type TRestOfNavigateMoreArgs = Parameters<Router['navigate']> extends [infer Arg, ...infer Rest] ? Rest : never

type TNavigateReturn = ReturnType<Router['navigate']>

type TRestOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Rest : never

type TFirstOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Exclude<Arg, string> : never

type TNavigateByUrlReturn = ReturnType<Router['navigateByUrl']>

type TAppRoutePaths = TRoutePaths

declare module '@angular/router' {

interface Router {

route: (commands: readonly TAppRoutePaths[], ...args: TRestOfNavigateMoreArgs) => TNavigateReturn

routeByUrl: (

url: TFirstOfNavigateByUrlArgs | TAppRoutePaths,

...args: TRestOfNavigateByUrlArgs

) => TNavigateByUrlReturn

}

}

Router.prototype.route = Router.prototype.navigate

Router.prototype.routeByUrl = Router.prototype.navigateByUrl

// ---cut---

@Component({ template: '' })

export class SomeComponent {

router = inject(Router)

navigateToDashboard() {

this.router.routeByUrl('dashboard')

}

routeToSomewhereNonExistent() {

// @errors: 2345

this.router.routeByUrl("non-existent-route");

}

}

```

We even have auto-complete in our IDE:

```ts twoslash

import { Router, Route, Routes } from '@angular/router';

import { inject, Component } from '@angular/core';

const routes = [

{

path: '',

},

{

path: 'dashboard',

children: [

{

path: 'analytics',

},

{

path: 'reports',

},

],

},

{

path: 'settings',

},

] as const satisfies Routes;

type TRoutePaths =

/* Loop through each of the children - TDeepChild */

TRoute extends { children: infer ChildRoutes extends Route[] }

? /**

* const childrenPaths = getRoutePaths(route, parentRoutePrefix)

* const childPath = route.path

* return childrenPaths + `${parentPrefixRoute}childPath` + childPath

**/

TRoutePaths | `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}` | TRoute['path']

: never

type TRestOfNavigateMoreArgs = Parameters<Router['navigate']> extends [infer Arg, ...infer Rest] ? Rest : never

type TNavigateReturn = ReturnType<Router['navigate']>

type TRestOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Rest : never

type TFirstOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Exclude<Arg, string> : never

type TNavigateByUrlReturn = ReturnType<Router['navigateByUrl']>

type TAppRoutePaths = TRoutePaths

declare module '@angular/router' {

interface Router {

route: (commands: readonly TAppRoutePaths[], ...args: TRestOfNavigateMoreArgs) => TNavigateReturn

routeByUrl: (

url: TFirstOfNavigateByUrlArgs | TAppRoutePaths,

...args: TRestOfNavigateByUrlArgs

) => TNavigateByUrlReturn

}

}

Router.prototype.route = Router.prototype.navigate

Router.prototype.routeByUrl = Router.prototype.navigateByUrl

// ---cut-before---

@Component({template: ''})

export class SomeComponent {

router = inject(Router)

navigateToDashboard() {

// @noErrors

this.router.routeByUrl("

// ^|

}

}

```

We now have type-checking on our paths which was our goal at the beginning.
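The augmentation and prototype-aliasing trick can also be tried without Angular. In this sketch a hypothetical `FakeRouter` class stands in for `Router`: since the class is declared in the same file, an interface with the same name merges with it directly, whereas for `Router` that interface has to sit inside `declare module '@angular/router' { ... }` as shown earlier. An augmentation like that only takes effect from a file that is itself a module, that is, one with at least one top-level `import` or `export`.

```ts
// Hypothetical stand-in for Angular's Router so the sketch runs on its own.
class FakeRouter {
  readonly visited: string[] = [];

  navigateByUrl(url: string): Promise<boolean> {
    this.visited.push(url);
    return Promise.resolve(true);
  }
}

// Hypothetical union of valid paths (playing the role of TAppRoutePaths).
type AppPath = '' | 'dashboard' | 'dashboard/analytics' | 'settings';

// Declaration merging: an interface with the same name as the class adds a
// member to the class's instance type.
interface FakeRouter {
  routeByUrl(url: AppPath): Promise<boolean>;
}

// Runtime implementation: reuse the existing method on the prototype. This
// type-checks because navigateByUrl accepts any string, a superset of AppPath.
FakeRouter.prototype.routeByUrl = FakeRouter.prototype.navigateByUrl;
```

Because the new method is just an alias assigned on the prototype, it exists on every instance at runtime and only stays correct while the aliased method's real signature keeps matching the declared one.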

### More Complex Scenarios

Our Router now handles the basic routing scenarios with static route URLs. However, **realistically**, most applications also pass route and query parameters. An example would look like:

```ts

const routes: Routes = [

{

path: 'dashboard',

component: DashboardComponent,

children: [

{

path: 'reports',

component: ReportsComponent,

children: [

{

path: ':id',

component: SingleReportComponent,

},

]

},

],

},

// Other routes

]

```

To access the `SingleReportComponent`, we need to pass the `id` to the `Router`'s navigation functions. Our implementation so far does not handle parameters.

### Limitations

Even though most applications have more complex routing requirements, this was an attempt at making the router a bit more type-safe for simple use-cases.

However, there are several limitations of this implementation such as:

1. This implementation relies on the underlying Angular router API. Changes to the function arguments (`Router.navigate` and `Router.navigateByUrl`) can break the types.

2. The above implementation only handles routes with static URLs. Since we don't know the values of route parameters beforehand, they can be any string, and a union of the generated type with `string` simply collapses to `string`, losing the checks.

## Experimenting and viewing work as play and Three Lessons from the Year

This past week I merged a pull request containing the last set of features that my team is planning to ship this year. Getting through this chunk of work got me thinking about some of the ideas and patterns that have made my work feel more satisfying this year. A lot of my day-to-day work involves tinkering with ideas and patterns and trying out different approaches to technical challenges. And when all the pieces come together and we release the work to our users, I am happy to see our team's efforts along the way validated.

Here are three things I have applied that have been helpful in my work this year:

### 1. signing up to experiment and try out new ideas

When the team brings me a challenge that I haven't encountered before or don't know how to solve, I never say that it cannot be done, or that we do not have the skill set for it, without first doing my own research and consulting my colleagues on the topic. At work we call such investigative, open-ended work a spike. I love doing this kind of work because it pushes me to explore unfamiliar territory. And almost 100% of the time I learn something new that later finds its way into a feature or into improving an existing implementation. Doing this has delivered the most compounding learning over time, as I have applied the lessons to work that came later in the year.

### 2. solve the hardest problem first

Usually, when tackling a large enough project, there are one or two core technical challenges that make up a big chunk of the work. These usually need more time commitment and tend to present an opportunity to learn something new. Getting to the core problems faster through rapid prototyping has helped me use my energy well, solving the most technically challenging problems when I have the most drive. The rest of the work is mostly patching up the solutions to the core problems and cleaning up the rough edges.

### 3. understanding how I work best

For me, short bursts of getting several low-stakes tasks done, paired with longer projects that I can focus on for days or even a week or more, is usually a good balance. For larger projects, I have found that I tend to shut out everything else and let the work simmer and occupy my mind as I make progress. Then I can keep a steady rhythm of high focus until I get the work through the door.

***

These are a few of the main lessons that underlined my work this year, and that I hope to keep building upon.

import { Callout } from 'vocs/components'

import { CoverPhoto } from '../../../components/CoverPhoto'

import { SpotifyTrack } from '../../../components/SpotifyTrack'

## Intermittent Sleep & Rediscovering Learning

There is Gorée where my heart of hearts bleeds,

This environment file contains mostly environment variables specific to each environment.

These values need to be available at run time.

An example of an environment file would be:

```ts twoslash

export const environment = {

apiUrl: 'https://api-dev.our-app.com/v1.0/',

cdnUrl: 'https://cdn-dev.our-app.com/',

appInsightsUrl: 'https://app-insights-dev.our-app.com/',

} as const

```

This environment file for our microfrontend is hosted on the remote url and so the host application does not know the values at the point of initialization.

The challenge then was how to load this environment file and make it available to the host application when the application starts up (at initialization).

### Utilizing `provideAppInitializer`

Angular provides a way to run async during the boostraping phase through the `provideAppInitializer` that is [provided at the application startup phase](https://angular.dev/api/core/provideAppInitializer).

If an async process is to be run, we can pass this function to be executed in the bootstrap phase and angular will not complete initialization until this async logic completes - an observable completes or a promise resolve.

We would first need to modify how we pass in our environment variables and load them into a service. As well, we would need to define a function to run in the `provideAppInitializer` callback. For our example, we will name our function `initialize()` (but you can choose to call your function something different such as `init` or `setup`):

```ts twoslash

// [!include ~/snippets/2026/app-env-service.ts:appenvservice]

```

Notice here that our environment service contains a function that returns a promise.

This is the function that we will pass to `provideAppInitializer`.

In the `bootstrap.ts` file for our host application, we can then initialize our `AppEnvService` to set up environment variables:

```ts twoslash

// [!include ~/snippets/2026/initializing-env-variables-in-ng-mf.ts]

// [!include ~/snippets/2026/app-env-service.ts]

import { bootstrapApplication } from '@angular/platform-browser';

import { provideRouter } from '@angular/router';

import { loadRemote } from '@module-federation/enhanced/runtime';

import { inject, provideAppInitializer } from '@angular/core'

// ---cut---

bootstrapApplication(AppComponent, {

providers: [

// Inject the AppEnvService and call its initialize function

provideAppInitializer(() => inject(AppEnvService).initialize()), // [!code focus]

provideRouter([

{ path: '', component: HomeComponent },

// @noErrors

{ path: 'remoteUrl', loadChildren: () => loadRemote('remote-app/remoteRoutes').then(m => m.routes)}

]),

// other providers...

]

})

```

During the application startup phase, Angular will run this async `AppEnvService.initialize()` function, fetch our environment variables and populate them into our `AppEnvService`.

### Epilogue

The main reason for implementing module federation was that two teams working on different parts of the application wanted to deploy independently.

This is not quite what micro-frontends were designed for, since our micro-frontend does not operate independently.

I do wonder what the [`loadRemote`](https://module-federation.io/guide/basic/runtime/runtime-api#runtime-api) function does under the hood to fetch and load the remote routes, and how it provides the same Angular context as the host application.

Something to investigate further!

## A More Type Safe-ish Angular Router

> An attempt at typing the Angular Router navigation functions (`Router.navigate` and `Router.navigateByUrl`).

### Angular Routing Overview

Routing in Angular is mostly straightforward and opinionated when using Angular's built-in router.

#### Creating Routes

To create routes in Angular, a developer first creates an array of type `Array<Route>` (aliased as `Routes`) and passes it to the `ApplicationConfig` through `provideRouter`. The following is a simple routing setup with [static URL paths](https://angular.dev/guide/routing/define-routes#static-url-paths):

```ts

import { ApplicationConfig } from '@angular/core'

import { provideRouter, Routes } from '@angular/router'

import { HomeComponent } from '~/components/home/home.ts'

import { DashboardComponent } from '~/components/dashboard/dashboard.ts'

import { AnalyticsComponent } from '~/components/analytics/analytics.ts'

import { ReportsComponent } from '~/components/reports/reports.ts'

import { SettingsComponent } from '~/components/settings/settings.ts'

const routes: Routes = [

{

path: '',

component: HomeComponent,

},

{

path: 'dashboard',

component: DashboardComponent,

children: [

{

path: 'analytics',

component: AnalyticsComponent,

},

{

path: 'reports',

component: ReportsComponent,

},

],

},

{

path: 'settings',

component: SettingsComponent,

},

]

/* Pass Routes to Application Config */

export const appConfig: ApplicationConfig = {

providers: [

//...other providers

provideRouter(routes),

],

}

```

#### Routing Tasks

Within a component, routing can be performed from the component's template or class.

##### Routing from the Component Template

A user might click a link or a button to navigate to another page. To perform routing from the template, a developer adds the `RouterLink` directive to the component's `imports` array and then uses the `routerLink` directive within the template:

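```ts

import { Component } from '@angular/core'

import { RouterLink } from '@angular/router'

import { DashboardComponent } from '~/components/dashboard/dashboard.ts'

import { SettingsComponent } from '~/components/settings/settings.ts'

@Component({

template: `

<a routerLink="/dashboard">Dashboard</a>

<a routerLink="/settings">Settings</a>

`,

imports: [RouterLink, DashboardComponent, SettingsComponent],

})

export class HomeComponent {}

```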

##### Routing from within the Component's Class

To perform routing from within a component's class, a developer injects the `Router` into the component and uses either the [`Router.navigate`](https://angular.dev/guide/routing/navigate-to-routes#routernavigate) or the [`Router.navigateByUrl`](https://angular.dev/guide/routing/navigate-to-routes#routernavigatebyurl) function:

```ts twoslash

import { Router, ActivatedRoute } from '@angular/router'

import { Component, inject } from '@angular/core'

@Component({ template: '' })

// ---cut---

export class HomeComponent {

router = inject(Router);

activatedRoute = inject(ActivatedRoute);

/* Using Router.navigate */

goToDashboard() {

this.router.navigate(['/dashboard'], {

relativeTo: this.activatedRoute

});

}

/* Using Router.navigateByUrl */

goToSettings() {

this.router.navigateByUrl('/settings');

}

}

```

### Motivation: Why Attempt to Add Types

When calling the Router's `.navigate` or `.navigateByUrl` functions, a developer can pass in any string and the TypeScript compiler will not complain. At runtime, a user who ends up on a route that doesn't exist can be caught with a catch-all (wildcard) path:

```ts twoslash

import { Routes } from '@angular/router';

// ---cut---

const routes: Routes = [

{

path: '**',

redirectTo: '/'

}

]

```

The incentive to add more type safety to the Router is mostly developer experience (DX): catching invalid routes while writing the code, instead of in the browser or, worse still, after the application is deployed.

For instance, consider a call to a non-existent route:

```ts

import { Router } from '@angular/router';

import { Component, inject } from '@angular/core';

@Component({template: ''})

export class SomeComponent {

router = inject(Router)

routeToSomewhere() {

this.router.routeByUrl("non-existent-route");

}

}

```

Such a call should produce a compile-time error (TypeScript error 2345, "Argument of type ... is not assignable to parameter of type ..."), because the expected parameter type, `TRoutePath`, is a union of the valid routes. This would help a developer track down the error faster.

### Building Out the Types from the Routes Array

Once the routes array is configured, we need to derive a type from it such as:

```ts twoslash

type TRoutePath = '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings'

```

While a developer can write the paths by hand, there would then be two places to update whenever the routes change. This is not ideal, but it may be simple enough for an application with few routes.

A better approach is to make the routes the source of truth and generate the type from the routes array. Our approach will be to write a generic type that takes in the routes.

#### Applying `const` Assertion and the `satisfies` operator

The first thing we need to do is apply a [const assertion](https://www.typescriptlang.org/docs/handbook/release-notes/typescript-3-4.html#const-assertions) to the `routes` array and combine it with the [`satisfies` operator](https://www.typescriptlang.org/docs/handbook/release-notes/typescript-4-9.html#the-satisfies-operator) to check that our `routes` still have the shape of Angular routes. Because `as const` makes the arrays `readonly`, we check against `readonly Route[]` rather than the mutable `Routes` (`Route[]`) type. (For the same reason, passing the array to `provideRouter`, which expects `Routes`, may need a mutable copy such as `[...routes]`.)

```ts twoslash

import { Route } from '@angular/router'

import { Component } from '@angular/core'

@Component({ template: '' })

class DashboardComponent {}

// ---cut---

const routes = [

{

path: 'dashboard',

component: DashboardComponent

}

//... more routes

] as const satisfies readonly Route[]

```

We can then capture the type of the routes as:

```ts

type TRoutes = typeof routes;

```
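To see why the `as const` assertion matters, here is a small framework-free sketch. `RouteLike` is a hypothetical stand-in for Angular's `Route` with only the two fields used in this post. A plain type annotation widens each `path` to `string | undefined`, while `as const` keeps literals such as `'dashboard'`, which the generic type in the next step relies on. The `satisfies` target is `readonly RouteLike[]` because a readonly array is not assignable to a mutable array type.

```typescript
// Hypothetical stand-in for Angular's Route (only the fields used in this post)
interface RouteLike {
  path?: string;
  children?: readonly RouteLike[];
}

// A type annotation widens the literal: each path becomes `string | undefined`
const annotatedRoutes: readonly RouteLike[] = [{ path: 'dashboard' }];
const widenedPaths: (string | undefined)[] = annotatedRoutes.map((route) => route.path);

// `as const` keeps the literal types, and `satisfies` still checks the shape
const checkedRoutes = [
  { path: 'dashboard', children: [{ path: 'analytics' }] },
] as const satisfies readonly RouteLike[];

// These compile only because the types are the literals, not `string`
const literalPath: 'dashboard' = checkedRoutes[0].path;
const childPath: 'analytics' = checkedRoutes[0].children[0].path;
```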

#### Creating a Generic Type to Extract the Paths

We need a way to extract the paths from the `routes` array: a generic type that takes the type `TRoutes` as input and outputs our union of valid routes.

##### Drafting out the Generic type

One way to think about the generic type is as a function that takes a single `Route` as input, loops through its children, and returns the route's own path combined with its children's paths.

Written as a regular (runtime) function, that would look like:

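```ts
import { Route } from '@angular/router';

function getRoutePaths(route: Route, parentPrefix = ''): string[] {
  const fullPath = `${parentPrefix}${route.path ?? ''}`
  const children = route.children ?? []

  /* The base case is where the route has no children */
  if (children.length === 0) {
    return [fullPath]
  }

  /* Otherwise, return the route's own path plus every child path, prefixed with `${fullPath}/` */
  const paths = [fullPath]
  for (const childRoute of children) {
    paths.push(...getRoutePaths(childRoute, `${fullPath}/`))
  }
  return paths
}

/* getRoutePaths({ path: 'dashboard', children: [{ path: 'analytics' }, { path: 'reports' }] })
   returns ['dashboard', 'dashboard/analytics', 'dashboard/reports'] */
```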

This helps us build out our generic type, which we will call `TRoutePaths`. The type takes a route (and the path prefix of its parent) as input, and recurses through all of its child routes:

```ts twoslash

import { Route } from '@angular/router';

// ---cut---

type TRoutePaths<TRoute, ParentPrefix extends string = ''> =

/* Recursive case: the route has children, so loop through each of them */

TRoute extends { path: string; children: infer ChildRoutes extends readonly Route[] }

? /**

* const fullPath = `${parentPrefix}${route.path}`

* return [fullPath, ...getRoutePaths(eachChild, `${fullPath}/`)]

**/

TRoutePaths<ChildRoutes[number], `${ParentPrefix}${TRoute['path']}/`> | `${ParentPrefix}${TRoute['path']}`

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}`

: never

```

Applying this to our `TRoutes` type, we get:

```ts twoslash

import { Route, Routes } from '@angular/router';

const routes = [

{

path: '',

},

{

path: 'dashboard',

children: [

{

path: 'analytics',

},

{

path: 'reports',

},

],

},

{

path: 'settings',

},

] as const satisfies readonly Route[];

type TRoutePaths<TRoute, ParentPrefix extends string = ''> =

/* Recursive case: the route has children, so loop through each of them */

TRoute extends { path: string; children: infer ChildRoutes extends readonly Route[] }

? /**

* const fullPath = `${parentPrefix}${route.path}`

* return [fullPath, ...getRoutePaths(eachChild, `${fullPath}/`)]

**/

TRoutePaths<ChildRoutes[number], `${ParentPrefix}${TRoute['path']}/`> | `${ParentPrefix}${TRoute['path']}`

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}`

: never

// ---cut---

type TRoutes = typeof routes;

type TAppRoutePaths = TRoutePaths<TRoutes[number]>

```

The above `TAppRoutePaths` is equivalent to:

```ts twoslash

type TRoutePath = '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings'

```
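One way to confirm this equivalence at compile time is a type-level equality check. The sketch below is framework-free: `RouteLike` is a hypothetical stand-in for Angular's `Route`, `Equal` is a commonly used community helper (not part of TypeScript or Angular), and `TRoutePaths` follows the recursive type above. If the derived union ever drifts from the hand-written one, the `check` line stops compiling.

```typescript
// Hypothetical stand-in for Angular's Route (only the fields used here)
interface RouteLike {
  path?: string;
  children?: readonly RouteLike[];
}

const routes = [
  { path: '' },
  { path: 'dashboard', children: [{ path: 'analytics' }, { path: 'reports' }] },
  { path: 'settings' },
] as const satisfies readonly RouteLike[];

// A route's full path, plus its children's paths prefixed with `<path>/`
type TRoutePaths<TRoute, ParentPrefix extends string = ''> =
  TRoute extends { path: string; children: infer ChildRoutes extends readonly RouteLike[] }
    ? TRoutePaths<ChildRoutes[number], `${ParentPrefix}${TRoute['path']}/`> | `${ParentPrefix}${TRoute['path']}`
    : TRoute extends { path: string }
      ? `${ParentPrefix}${TRoute['path']}`
      : never;

type TAppRoutePaths = TRoutePaths<(typeof routes)[number]>;
type TRoutePath = '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings';

// Evaluates to `true` only when A and B are exactly the same type
type Equal<A, B> =
  (<T>() => T extends A ? 1 : 2) extends (<T>() => T extends B ? 1 : 2) ? true : false;

// Fails to compile if the derived union differs from the hand-written one
const check: Equal<TAppRoutePaths, TRoutePath> = true;
```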

##### Applying our Extracted Type to Angular Router

To extend the Angular Router, we add two new methods to `Router.prototype` that use our typed routes. We can augment the `@angular/router` module with our new types using [TypeScript Modules](https://www.typescriptlang.org/docs/handbook/namespaces-and-modules.html#using-modules) and add two new methods, `route` and `routeByUrl`, that use our `TAppRoutePaths` type.

##### Helper Types

We will need a few helper types to extract out the type of arguments that are passed into the `.navigate` and the `.navigateByUrl` functions:

```ts twoslash

import { Router } from '@angular/router';

// ---cut---

type TRestOfNavigateMoreArgs = Parameters<Router['navigate']> extends [infer Arg, ...infer Rest] ? Rest : never

type TNavigateReturn = ReturnType<Router['navigate']>

type TRestOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Rest : never

type TFirstOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Exclude<Arg, string> : never

type TNavigateByUrlReturn = ReturnType<Router['navigateByUrl']>

```
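To see what these helpers extract, here they are applied to a hypothetical, framework-free function with the same shape as `Router.navigateByUrl(url, extras?)`. `UrlTreeLike`, `navigateByUrlLike`, and `typedNavigateByUrl` are illustrative names, not Angular APIs. Splitting the parameter tuple lets a wrapper narrow only the first argument while passing the remaining arguments and the return type through unchanged.

```typescript
// Hypothetical stand-in for Angular's UrlTree
class UrlTreeLike {
  segments: string[];
  constructor(segments: string[]) {
    this.segments = segments;
  }
}

const calls: string[] = [];

// Hypothetical function shaped like Router.navigateByUrl(url: string | UrlTree, extras?)
function navigateByUrlLike(url: string | UrlTreeLike, extras?: { replaceUrl?: boolean }): boolean {
  const target = typeof url === 'string' ? url : url.segments.join('/');
  calls.push(extras?.replaceUrl ? `replace:${target}` : target);
  return true;
}

type NavigateArgs = Parameters<typeof navigateByUrlLike>;

// First argument with plain `string` removed: leaves UrlTreeLike
type FirstArgWithoutString = NavigateArgs extends [infer Arg, ...unknown[]] ? Exclude<Arg, string> : never;

// Everything after the first argument: [extras?: { replaceUrl?: boolean }]
type RestArgs = NavigateArgs extends [unknown, ...infer Rest] ? Rest : never;

type AppPath = 'dashboard' | 'settings';

// Narrower first argument; rest arguments and return type are reused unchanged
function typedNavigateByUrl(url: FirstArgWithoutString | AppPath, ...rest: RestArgs): ReturnType<typeof navigateByUrlLike> {
  return navigateByUrlLike(url, ...rest);
}

typedNavigateByUrl('dashboard');
typedNavigateByUrl(new UrlTreeLike(['settings']), { replaceUrl: true });
// typedNavigateByUrl('non-existent-route'); // would not compile
```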

##### Extending `Router.navigate` and `Router.navigateByUrl`

We can then augment the `@angular/router` module by declaring the `Router` interface with our new methods, `Router.route` and `Router.routeByUrl`. We then assign the existing `navigate` and `navigateByUrl` implementations to these new methods on `Router.prototype`.

```ts

import { Router } from '@angular/router'

declare module '@angular/router' {

interface Router {

route: (commands: readonly TAppRoutePaths[], ...args: TRestOfNavigateMoreArgs) => TNavigateReturn

routeByUrl: (

url: TFirstOfNavigateByUrlArgs | TAppRoutePaths,

...args: TRestOfNavigateByUrlArgs

) => TNavigateByUrlReturn

}

}

Router.prototype.route = Router.prototype.navigate

Router.prototype.routeByUrl = Router.prototype.navigateByUrl

```
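The same two-step pattern (a type-level declaration plus a runtime prototype assignment) can be tried without Angular. In this hypothetical sketch, `FakeRouter` stands in for `Router`. Because a class and an interface with the same name merge, the interface adds a narrower `routeByUrl` signature to the class's instance type, and the prototype assignment supplies its implementation by reusing `navigateByUrl`.

```typescript
// Hypothetical class standing in for Angular's Router
class FakeRouter {
  history: string[] = [];

  navigateByUrl(url: string): boolean {
    this.history.push(url);
    return true;
  }
}

type AppPath = '' | 'dashboard' | 'settings';

// Declaration merging: adds a typed method to FakeRouter's instance type (types only)
interface FakeRouter {
  routeByUrl(url: AppPath): boolean;
}

// Runtime: the new method reuses the existing implementation
FakeRouter.prototype.routeByUrl = FakeRouter.prototype.navigateByUrl;

const router = new FakeRouter();
router.routeByUrl('dashboard');
router.routeByUrl('settings');
// router.routeByUrl('non-existent-route'); // would not compile: not assignable to AppPath
```

The interface alone would only change types; without the prototype assignment, calling `routeByUrl` would fail at runtime.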

And there we have it. We can now use the `Router.route` and `Router.routeByUrl` functions in our application:

```ts twoslash

import { Router, Route, Routes } from '@angular/router';

import { inject, Component } from '@angular/core';

const routes = [

{

path: '',

},

{

path: 'dashboard',

children: [

{

path: 'analytics',

},

{

path: 'reports',

},

],

},

{

path: 'settings',

},

] as const satisfies readonly Route[];

type TRoutePaths<TRoute, ParentPrefix extends string = ''> =

/* Recursive case: the route has children, so loop through each of them */

TRoute extends { path: string; children: infer ChildRoutes extends readonly Route[] }

? /**

* const fullPath = `${parentPrefix}${route.path}`

* return [fullPath, ...getRoutePaths(eachChild, `${fullPath}/`)]

**/

TRoutePaths<ChildRoutes[number], `${ParentPrefix}${TRoute['path']}/`> | `${ParentPrefix}${TRoute['path']}`

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}`

: never

type TRestOfNavigateMoreArgs = Parameters<Router['navigate']> extends [infer Arg, ...infer Rest] ? Rest : never

type TNavigateReturn = ReturnType<Router['navigate']>

type TRestOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Rest : never

type TFirstOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Exclude<Arg, string> : never

type TNavigateByUrlReturn = ReturnType<Router['navigateByUrl']>

type TAppRoutePaths = TRoutePaths<(typeof routes)[number]>

declare module '@angular/router' {

interface Router {

route: (commands: readonly TAppRoutePaths[], ...args: TRestOfNavigateMoreArgs) => TNavigateReturn

routeByUrl: (

url: TFirstOfNavigateByUrlArgs | TAppRoutePaths,

...args: TRestOfNavigateByUrlArgs

) => TNavigateByUrlReturn

}

}

Router.prototype.route = Router.prototype.navigate

Router.prototype.routeByUrl = Router.prototype.navigateByUrl

// ---cut---

@Component({ template: '' })

export class SomeComponent {

router = inject(Router)

navigateToDashboard() {

this.router.routeByUrl('dashboard')

}

routeToSomewhereNonExistent() {

// @errors: 2345

this.router.routeByUrl("non-existent-route");

}

}

```

We even have auto-complete in our IDE:

```ts twoslash

import { Router, Route, Routes } from '@angular/router';

import { inject, Component } from '@angular/core';

const routes = [

{

path: '',

},

{

path: 'dashboard',

children: [

{

path: 'analytics',

},

{

path: 'reports',

},

],

},

{

path: 'settings',

},

] as const satisfies readonly Route[];

type TRoutePaths<TRoute, ParentPrefix extends string = ''> =

/* Recursive case: the route has children, so loop through each of them */

TRoute extends { path: string; children: infer ChildRoutes extends readonly Route[] }

? /**

* const fullPath = `${parentPrefix}${route.path}`

* return [fullPath, ...getRoutePaths(eachChild, `${fullPath}/`)]

**/

TRoutePaths<ChildRoutes[number], `${ParentPrefix}${TRoute['path']}/`> | `${ParentPrefix}${TRoute['path']}`

: /* base case - return the route path */

TRoute extends { path: string }

? `${ParentPrefix}${TRoute['path']}`

: never

type TRestOfNavigateMoreArgs = Parameters<Router['navigate']> extends [infer Arg, ...infer Rest] ? Rest : never

type TNavigateReturn = ReturnType<Router['navigate']>

type TRestOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Rest : never

type TFirstOfNavigateByUrlArgs = Parameters<Router['navigateByUrl']> extends [infer Arg, ...infer Rest] ? Exclude<Arg, string> : never

type TNavigateByUrlReturn = ReturnType<Router['navigateByUrl']>

type TAppRoutePaths = TRoutePaths<(typeof routes)[number]>

declare module '@angular/router' {

interface Router {

route: (commands: readonly TAppRoutePaths[], ...args: TRestOfNavigateMoreArgs) => TNavigateReturn

routeByUrl: (

url: TFirstOfNavigateByUrlArgs | TAppRoutePaths,

...args: TRestOfNavigateByUrlArgs

) => TNavigateByUrlReturn

}

}

Router.prototype.route = Router.prototype.navigate

Router.prototype.routeByUrl = Router.prototype.navigateByUrl

// ---cut-before---

@Component({template: ''})

export class SomeComponent {

router = inject(Router)

navigateToDashboard() {

// @noErrors

this.router.routeByUrl("

// ^|

}

}

```

We now have type-checking on our paths which was our goal at the beginning.

### More Complex Scenarios

Our Router now handles the basic routing scenarios with static route URLs. However, **realistically**, most applications also pass in route and query parameters. An example would look like:

```ts

const routes: Routes = [

{

path: 'dashboard',

component: DashboardComponent,

children: [

{

path: 'reports',

component: ReportsComponent,

children: [

{

path: ':id',

component: SingleReportComponent,

},

]

},

],

},

// Other routes

]

```

To access the `SingleReportComponent`, we need to pass the `id` to the `Router`'s navigation functions. Our implementation so far does not handle parameters.

### Limitations

Even though most applications have more complex routing requirements, this was an attempt at making the router a bit more type-safe for simple use-cases.

However, there are several limitations of this implementation such as:

1. This implementation relies on the underlying Angular router API. Changes to the function arguments (`Router.navigate` and `Router.navigateByUrl`) can break the types.

2. The above implementation only handles routes with static URLs. Since we don't know the route parameters beforehand (they can be any string), the generated type would have to be widened with `string`, and a union of the generated type with `string` is simply `string`.
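The widening problem can be seen in a small type-level sketch. A union of the generated paths with `string` accepts any string again. The template literal type at the end is only one possible direction for the `:id` segment, not something this implementation does, and it still accepts an empty id such as `'dashboard/reports/'`.

```typescript
type TRoutePath = '' | 'dashboard' | 'dashboard/analytics' | 'dashboard/reports' | 'settings';

// A union with `string` collapses to plain `string`, so the literal checks disappear
type TWidenedPath = TRoutePath | string;
const anyPath: TWidenedPath = 'non-existent-route'; // compiles

// Hypothetical alternative: keep the static prefix and leave only the :id segment open
type TReportPath = `dashboard/reports/${string}`;
const singleReport: TReportPath = 'dashboard/reports/42';
// const invalidReport: TReportPath = 'reports/42'; // would not compile
```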

## Experimenting, Viewing Work as Play, and Three Lessons from the Year

This past week I merged a pull request containing the last set of features that my team plans to ship this year. Getting through this chunk of work got me thinking about some of the ideas and patterns that have made my work feel more satisfying this year. A lot of my day-to-day work involves tinkering with ideas and patterns, and trying out different approaches to technical challenges. When all the pieces come together and we release the work to our users, I am happy to see our team's efforts validated along the way.

Here are three things I have applied that have made my work more satisfying this year:

### 1. signing up to experiment and try out new ideas

When the team brings up a challenge that I haven't encountered before or don't know how to solve, I never say that it cannot be done, or that we do not have the skill set for it, until I have at least done my own research and consulted my colleagues on the topic. At work we call such investigative, open-ended work a spike. I love doing this kind of work because it pushes me to explore unfamiliar territory. And almost every time, I learn something new that later finds its way into a feature or improves an existing implementation. This has delivered the most compounding learning over time, as I have applied those lessons to work later in the year.

### 2. solve the hardest problem first

When tackling a large-enough project, there are usually one or two core technical challenges that make up a big chunk of the work. These need more time and tend to present an opportunity to learn something new. Getting to the core problems faster through rapid prototyping has helped me use my energy well, solving the most technically challenging problems when I have the most drive. The rest of the work is mostly patching up the solutions to the core problems and smoothing out the rough edges.

### 3. understanding how I work best

For me, pairing short bursts of getting several low-stakes tasks done with longer projects that I can focus on for days, or even a week or more, is usually a good balance. For larger projects, I have found that I tend to shut out everything else and let the work simmer and occupy my mind as I make progress. Then I can keep a steady rhythm of high focus until I get the work through the door.

***

These are a few of the main lessons that underlined my work this year, and that I hope to keep building upon.

import { Callout } from 'vocs/components'

import { CoverPhoto } from '../../../components/CoverPhoto'

import { SpotifyTrack } from '../../../components/SpotifyTrack'

## Intermittent Sleep & Rediscovering Learning

There is Gorée where my heart of hearts bleeds,

The house of red basalt brick to the right,

The little red house in the middle, between two gulfs

Of shadow and light.

- From the poem It's Five O'Clock, by Léopold Sédar Senghor

Several times this week sleep evaded me.

And I stared into the darkness.

I’ve been struggling to sleep lately and I’m unsure if it’s because of the change in season or whether my body is trying to tell me something.

This has been happening for the past couple of weeks and hopefully I’ll be able to find some good sleep rhythms into the fall.

I took up learning C# and DotNet since I felt fairly competent and comfortable with Angular/TS, to the point where I didn’t feel I was growing or being pushed into a challenge (at work).

There’s something about pushing my own boundaries at the workplace where it seems as if I have to be the one who redefines my own and others’ understanding of what I can do.

People know I can do A, but I want to do A and B, so I have to build a level of confidence in doing the new thing that I am learning.

And at times I feel myself wanting to go back to that well-defined understanding of my role (focusing on the UI), because it is familiar and comfortable.

However, I am allowing myself to change and grow.

So far I have had to do these learnings in my own time and at my own pace and the more I push into this new foray, the more I remind myself that I need to pay attention to how I am learning, and what I am learning about myself and others.

No one will hold my hand (per se), and I find myself having to carve out my own space in the workplace.

#### Learnings over September 🕵🏾♂️

##### Improving List Rendering Performance using Angular Material CDK Virtual Scrolling

The Angular Material CDK provides an API for rendering large lists that improves performance when an application has to render many items in a list.

We had a situation where we loaded a large set of data, and rendering it with the traditional `ngFor` directive led to slow performance; in some cases the entire browser stopped responding.

I used [Virtual Scrolling](https://material.angular.io/cdk/scrolling/overview#virtual-scrolling) from the CDK to drastically improve the application’s responsiveness and performance.
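In a template, the CDK's `cdk-virtual-scroll-viewport` with a fixed `itemSize` and `*cdkVirtualFor` handles this for you. The sketch below is only a hypothetical illustration of the arithmetic behind fixed-size virtual scrolling (it is not the CDK's implementation): given the scroll position, only the rows in or near the viewport need DOM nodes.

```typescript
// Hypothetical sketch of fixed-size virtual scrolling arithmetic (not the CDK's code)
interface VisibleRange {
  start: number; // index of the first rendered item
  end: number; // index one past the last rendered item
}

function getVisibleRange(
  scrollTop: number,
  viewportHeight: number,
  itemHeight: number,
  itemCount: number,
  buffer = 0, // extra rows rendered above and below to avoid blank gaps while scrolling
): VisibleRange {
  const firstVisible = Math.floor(scrollTop / itemHeight);
  const visibleCount = Math.ceil(viewportHeight / itemHeight);
  return {
    start: Math.max(0, firstVisible - buffer),
    end: Math.min(itemCount, firstVisible + visibleCount + buffer),
  };
}

// 10,000 rows of 48px in a 480px viewport, scrolled down 4,800px
const range = getVisibleRange(4800, 480, 48, 10000, 2);
// → { start: 98, end: 112 }: 14 rows rendered instead of 10,000
```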

##### Auxiliary Routes in Angular

I was researching whether Angular can handle multiple routers: for example, a dialog whose routing you would like to keep independent from the primary application router.

This is possible through [named outlets in Angular](https://v17.angular.io/guide/router-tutorial-toh#displaying-multiple-routes-in-named-outlets).

I might do a more in-depth post on this to explore the use cases and drawbacks.

***

## A Freshman at NgConf 2023

In most of my work and experience building applications from my home office, days can morph into weeks of solo work with the occasional pair programming session and routine standup meetings.

So I'll admit that I was anxious, and very much new to the craft of attending a developer conference, which seemed to me to revolve around networking with other developers, learning what's new in the Angular space, and meeting speakers and sponsors.

The Angular Framework is advancing and keeping up by walking the fine line of adopting new features and bringing the community along in the process.

For developers new to the framework, Angular is trying to be more approachable by adding new features such as standalone components that make it easier to bootstrap an Angular application.

More experienced developers are also excited by the push to embrace features that improve parts of the framework such as hydration, reactivity and performance.

Suffice to say, the conference showcased the bleeding-edge of what the Angular team and community are doing to push the framework and the web in general forward.

The turnout included developers from small and large teams, from those who had just begun working in Angular to those who have been around since the AngularJS days.

So what did I take out of the conference?

I learned something new about the framework: how to use auxiliary routes in Angular and how to type the `ngTemplateOutletContext` input.

Over breakfast and lunch, I spoke with developers working with Angular in different contexts, and there was a general feeling of excitement about how the framework keeps enabling them to build better applications for their users, while improving the developer experience (DX) with each release as it embraces tooling from the community, for example by:

* integrating tools such as [Vite and esbuild](https://angular.io/guide/esbuild) that speed up local development and the build process

* embracing more modern testing tools by integrating [Jest support and migrating away from Karma to Web Test Runner](https://blog.angular.io/moving-angular-cli-to-jest-and-web-test-runner-ef85ef69ceca) as well as supporting community tooling such as [Cypress](https://docs.cypress.io/guides/component-testing/angular/overview)

* [improving the hydration process](https://angular.io/guide/hydration) for server side rendered applications

Can Angular be exciting? I don't know if that's even the right question to ask.

As more applications are getting older with time, can Angular provide a platform where developers can not only maintain those older applications but improve them with each new version of Angular? More so, can Angular be the framework of choice for developers building new applications? These seem to be deeper questions that the community is tackling.

The work to improve the framework is continuing with improvements on reactivity, performance and hydration just to name a few areas where work is already in progress.

I left the conference a lot more hopeful and excited to get back to working with my team through the various problems that software development teams face each day.

However much grunt work is needed, software delivery teams are made up of people who work in specific group and individual contexts.

The more important lessons from the conference were how to contribute more effectively to the success of my team as we build the various products to meet business needs with the knowledge that the tools out there are meant to help us along the way.

***

[Notes from the conference can be found here](https://github.com/laudebugs/conference-notes/blob/main/conferences/ngconf/ngconf-2023.md)

import { StackBlitzEmbed } from '../../../components/StackBlitzEmbed'

import { SpotifyTrack } from '../../../components/SpotifyTrack'

## Guarding against Unsaved Changes in Angular Apps using Route Guards

At some point, we’ve all mistakenly closed a document editor with a paragraph or two of unsaved edits, or the browser tab where we were drafting an email. Most of the time, when we attempt to navigate away to other parts of these applications, a dialog box reminds us that some information hasn’t yet been synced to the cloud (if the app in question even has an autosave feature).

Within Angular applications, we can leverage the `CanDeactivate` interface to implement a route guard which can then be added to the [route’s `canDeactivate` property](https://angular.io/api/router/Route#properties) to notify a user that they have unsaved changes in their app.

### Defining the Component that can deactivate navigation

:::tip

The `CanDeactivate` is an interface that a component has to implement that allows it to “intercept” navigation calls and cancel or proceed with navigation.

:::

The component that checks whether navigation can proceed or be cancelled contains a function, `canDeactivate`, that can be called in the route guard to check whether or not navigation away from the component or page can continue or be cancelled.

An interface can be defined which will be implemented by our component. The function to be called can have one of the following return types:

```tsx

type CanDeactivateFn = (...args: any[]) => Observable<boolean | UrlTree> | Promise<boolean | UrlTree> | boolean | UrlTree;

```

In our case, we’ll stick to `boolean | Observable<boolean>`. The guard will call the component’s `canDeactivate` function, which returns `boolean | Observable<boolean>` depending on whatever checks the component runs.

So we have as our interface:

```tsx

// deactivatable-component.interface.ts

import { Observable } from 'rxjs'

export interface DeactivatableComponent {

canDeactivate: () => boolean | Observable<boolean>

}

```

We can now implement this interface in a component. Assuming we have a form page that might contain unsaved changes, the component has the following functions:

* `containsUnsavedChanges` - a function that returns `true` or `false` checking if the form values have been saved by the user. This implementation could be enhanced to fit any use case — even checking the server for unsaved changes or invalid values and so forth

* `ngAfterContentInit` - this lifecycle hook updates the form with the values saved in local storage. These values could instead come from the state and/or the database

* `save` - this function updates the state when the user clicks the “Save” button.

* `canDeactivate` - this is our implementation of the `CanDeactivate` interface that our component implements. This function checks whether changes have been saved when a user tries to navigate away from the page, and if not, opens a dialog to confirm whether the user would like to proceed with or without saving the changes

```tsx

import { AfterContentInit, Component, ViewChild } from '@angular/core'

import { NgForm } from '@angular/forms'

import { MatDialog } from '@angular/material/dialog'

import { tap } from 'rxjs'

import { CoreModule } from '../../core/core.module'

import { DeactivatableComponent } from '../../unsaved-changes.guard'

import { UnsavedChangesDialog } from '../unsaved-changes-dialog.component'

@Component({

standalone: true,

imports: [CoreModule],

templateUrl: './favorites-form.component.html',

styles: [

`

h1{

text-align: center;

}

form{

margin: 1em;

display: grid;

gap: 1em;

}

footer{

display: flex;

justify-content: center;

align-items: center;

flex-direction: column;

}

.unsaved-changes{

margin-bottom: 0.5em;

}

`,

],

})

export class FormComponent implements DeactivatableComponent {

/** Our favorites object to save changes */

private favorites = { movie: '', tvShow: '' }

/** Read the form from the Template */

@ViewChild('FavoritesForm', { static: true }) favoritesForm!: NgForm

constructor(private dialog: MatDialog) {}

ngAfterContentInit() {

/** We need to check the next tick since the controls are not registered yet */

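setTimeout(() =>

// getItem returns null when nothing is saved yet, so fall back to the JSON string 'null'

this.favoritesForm.setValue(JSON.parse(window.localStorage?.getItem('favorites') ?? 'null') ?? this.favorites)

)

this.favorites = JSON.parse(window.localStorage?.getItem('favorites') ?? 'null') ?? this.favorites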

}

/**

* Checks whether the form contains unsaved changes

*/

containsUnsavedChanges() {

// True if any saved value differs from the current form value

return (Object.keys(this.favorites) as Array<'movie' | 'tvShow'>)

.some((key) => this.favoritesForm.value[key] !== this.favorites[key])

}

/**

* Updates the favorites object and saves it to local storage

*/

save() {

this.favorites = { ...this.favoritesForm.value }

window.localStorage.setItem('favorites', JSON.stringify(this.favorites))

}

/**

* If changes are not saved, a dialog is opened to confirm with the user

* that they want to proceed without saving

*/

canDeactivate() {

if (!this.containsUnsavedChanges()) {

return true

} else {

return this.dialog.open(UnsavedChangesDialog).afterClosed()

}

}}

}

```

Here would be the template for this component:

```html

Favourite Movies & TV Shows

```

As the component shows, if the user tries to navigate away without saving their changes, a dialog opens to confirm whether they would like to proceed without saving.
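The unsaved-changes decision itself does not depend on Angular, which makes it easy to reason about in isolation. The sketch below restates it as plain TypeScript, assuming the same two-field `Favorites` shape the component uses; `hasUnsavedChanges`, `decideDeactivation`, and the `askUser` callback (a stand-in for the dialog) are hypothetical names, not part of the original component or of Angular's API.

```ts
// Shape of the saved favorites, mirroring the component's `favorites` field
interface Favorites {
  movie: string
  tvShow: string
}

// Hypothetical helper: true when any field differs from its saved value,
// the same comparison `containsUnsavedChanges()` makes against the form
function hasUnsavedChanges(saved: Favorites, current: Favorites): boolean {
  const keys = Object.keys(saved) as (keyof Favorites)[]
  return keys.some((key) => current[key] !== saved[key])
}

// Hypothetical helper: allow navigation straight away when nothing changed,
// otherwise defer to `askUser`, which stands in for the unsaved-changes dialog
function decideDeactivation(
  saved: Favorites,
  current: Favorites,
  askUser: () => boolean,
): boolean {
  return hasUnsavedChanges(saved, current) ? askUser() : true
}

const saved: Favorites = { movie: 'first movie', tvShow: 'first show' }
console.log(decideDeactivation(saved, { ...saved }, () => false)) // true
console.log(decideDeactivation(saved, { ...saved, movie: 'edited' }, () => false)) // false
```

Because `askUser` is only called when a field differs from its saved value, an untouched form never prompts the user.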

### Implementing the CanDeactivate guard

There are two ways to write route guards in Angular: as a class that implements the `CanDeactivate` interface, or as a function with [the `CanDeactivateFn` signature](https://angular.io/api/router/CanDeactivateFn).

1. Implementing the guard as a function

Angular 14.2 introduced functional route guards as a way to simplify writing and wiring up the various types of guards in Angular. You can read more about the updates in [this blog post (Advancements in the Angular Router)](https://blog.angular.io/advancements-in-the-angular-router-5d69ec4c032).

Our implementation then simply calls the component's `canDeactivate` function:

```tsx
import { CanDeactivateFn } from '@angular/router'
import { DeactivatableComponent } from './deactivatable-component.interface'

/** Our Route Guard as a Function */
export const canDeactivateFormComponent: CanDeactivateFn<DeactivatableComponent> = (
  component: DeactivatableComponent,
) => {
  // Delegate to the component; allow navigation if it has no check of its own
  if (component.canDeactivate) {
    return component.canDeactivate()
  }
  return true
}
```

This function can then be passed directly to the routes as:

```tsx
import { Routes } from '@angular/router'

const routes: Routes = [
  {
    path: 'favorites',
    component: FormComponent,
    canDeactivate: [canDeactivateFormComponent],
  },
]
```

2. Implementing the route guard as an injectable class

Route guards can also be implemented as an injectable class. This implementation looks very similar to the functional guard:

```tsx
import { Injectable } from '@angular/core'
import { CanDeactivate } from '@angular/router'
import { Observable } from 'rxjs'

/* Our Route Guard as an Injectable Class */
@Injectable({
  providedIn: 'root',
})
export class UnsavedChangesGuard implements CanDeactivate<DeactivatableComponent> {
  canDeactivate(component: DeactivatableComponent) {
    // Delegate to the component; allow navigation if it has no check of its own
    if (component.canDeactivate) {
      return component.canDeactivate()
    }
    return true
  }
}

/** Implemented by components that can veto navigating away from them */
export interface DeactivatableComponent {
  canDeactivate: () => boolean | Observable<boolean>
}
```

This can then also be added to the `canDeactivate` property for the route as:

```tsx
import { Routes } from '@angular/router'

const routes: Routes = [
  {
    path: 'favorites',
    component: FormComponent,
    canDeactivate: [UnsavedChangesGuard],
  },
]
```

With our guard in place, if we edit the form and try to navigate away without saving, a dialog warns us first.

You can [preview an example of the app here](https://angular-zzjpgu.stackblitz.io).

### Links and Resources

* [`Route` properties (Angular Docs)](https://angular.io/api/router/Route#properties)

* [`CanDeactivateFn` signature](https://angular.io/api/router/CanDeactivateFn)

* [`CanDeactivate` interface](https://angular.io/api/router/CanDeactivate)

* [Advancements in the Angular Router](https://blog.angular.io/advancements-in-the-angular-router-5d69ec4c032) (Angular Blog)

#### Here's my track of the Week

## Improving Your Git Workflows with Aliases

There are plenty of times when I have found myself looking up git commands that I run day to day, whether at my day job or on side projects. Git provides the ability to configure aliases: short commands that stand in for longer commands that git executes.

### Configuring Git Aliases

A git alias can be set up by running `git config --global alias.<name> <command>`, where `<name>` is the short command that triggers `<command>`. A simple example is a `git ac` alias that takes a commit message, stages all files, and creates a signed commit (`-S`) from them in one go. Setting up this alias looks like:

```txt

git config --global alias.ac '!git add -A && git commit -S -m'

```

This command adds an entry named `ac` under the `[alias]` section of the global `.gitconfig` file, which makes the alias available in any git repository:

```txt

[alias]

ac = !git add -A && git commit -S -m

```

After editing some files in a git repository, running `git ac "docs: update notes"` stages all changed files in that repository and commits them using the commit message `"docs: update notes"`.
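An alias whose value starts with `!` is run as a shell command, and any arguments typed after the alias are passed along to it. The sketch below is a simplified model of that step, based on the assumption (from how git's run-command code behaves) that git invokes `sh -c`, appends `"$@"` to the command when arguments are present, and passes the command itself as `$0`. It ignores the case where git runs a command without shell metacharacters directly, and `shellAliasArgv` is a hypothetical helper, not a git API.

```ts
// Simplified model (an assumption, not git's source): build the argv that git
// would execute for a shell alias and the arguments typed after it
function shellAliasArgv(aliasValue: string, args: string[]): string[] {
  if (!aliasValue.startsWith('!')) {
    throw new Error('not a shell alias: the value must start with "!"')
  }
  const command = aliasValue.slice(1)
  // "$@" is only appended when there are arguments to forward
  const script = args.length > 0 ? `${command} "$@"` : command
  // The command doubles as $0, so the user's arguments become $1, $2, ...
  return ['sh', '-c', script, command, ...args]
}

const argv = shellAliasArgv('!git add -A && git commit -S -m', ['docs: update notes'])
console.log(argv[2]) // git add -A && git commit -S -m "$@"
console.log(argv[4]) // docs: update notes
```

Under this model, the commit message from `git ac "docs: update notes"` is what `"$@"` expands to, so it lands right after `-m`. The same mechanism explains the trailing `#` in the `parent` alias below: it turns the appended `"$@"` into a shell comment, so any stray arguments are ignored.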

### A list of Useful Git Aliases

Here, then, is a list of the git aliases that drive my daily work.

I’ll group them into (a) commands used within a normal git repository and (b) commands used within a **bare** git repository.

#### Within a normal git repository

:::tip

These commands can also be run within a worktree of a bare git repository.

:::

1. `ac` - Pass in a commit message to the git alias. The command stages all files (`git add -A`) and commits them with the commit message passed in.

```txt

ac = !git add -A && git commit -S -m

```

2. `add-string` - Among all the changed files in a git repository, stage only the files whose diff matches a certain pattern. For instance, to stage only the files whose changes mention `randomFilterFunction`, run `git add-string "randomFilterFunction"`. Note that `git diff -G` treats the pattern as a regular expression matched against added or removed lines, and that plain `git diff` only covers unstaged changes to tracked files, so untracked files are not picked up.

```txt

add-string = "!sh -c \"git diff -G '$1' --name-only | xargs git add\" -"

```

3. `last-checked` - Within a git repository, find the last `n` checked-out branches.

```txt

last-checked = !git reflog | grep -i 'checkout: moving' | head -n

```

This is useful when trying to find out the last few branches that were worked on, especially when switching between branches. For instance, running `git last-checked 5` would return output similar to:

```txt

ae956a5 HEAD@{4}: checkout: moving from main to chore/update-new-env-gh

ae956a5 HEAD@{5}: checkout: moving from chore/update-node-ci to main

ae956a5 HEAD@{7}: checkout: moving from main to chore/update-node-ci

aafa50f HEAD@{9}: checkout: moving from docs/update to main

fba6d0a HEAD@{11}: checkout: moving from fix/github-actions-permissions to docs/update

```

4. `parent` - Find the branch that the current branch was created from. The alias infers this from the output of `git show-branch`, so treat the result as a best guess. [Source for this git alias command on StackOverflow.](https://stackoverflow.com/questions/3161204/how-to-find-the-nearest-parent-of-a-git-branch)

```txt

parent = "!git show-branch | grep '*' | grep -v \"$(git rev-parse --abbrev-ref HEAD)\" | head -n1 | sed 's/.*\\[\\(.*\\)\\].*/\\1/' | sed 's/[\\^~].*//' #"

```

5. `push-origin` - Sets up the upstream and pushes the current local branch to the `origin` remote. A branch created locally has to be published before it can be pushed to, and this alias does both from the command line in a single step.

```txt

push-origin = !git push --set-upstream origin \"$(git rev-parse --abbrev-ref HEAD)\"

```

6. `uncommit` - Undoes the last commit in a git repository while keeping its changes staged (`--soft`).

```txt

uncommit = reset --soft HEAD~1

```

7. `cb` - Creates and checks out a new branch; an alias for `git checkout -b {branch_name}`.

```txt

cb = checkout -b

```

8. `adc` - Stages and commits all the edited files in a git repository; it is identical to `ac`.

```txt

adc = !git add -A && git commit -S -m

```
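The `last-checked` and `parent` aliases are text pipelines over git's output. The TypeScript sketch below restates what each stage does, which can help when adapting them. Unlike the shell version, `recentBranches` also skips branches that were already listed (in the `last-checked` sample output above, `main` appears twice). Both function names are hypothetical, and the `git show-branch` lines used with `parentBranch` are made up for illustration.

```ts
// Hypothetical helper mirroring `last-checked`: keep reflog entries that
// record a checkout, take the branch that was moved *to*, and skip repeats
function recentBranches(reflogLines: string[], n: number): string[] {
  const branches: string[] = []
  for (const line of reflogLines) {
    // Same filter as `grep -i 'checkout: moving'`, capturing the target branch
    const target = /checkout: moving from (\S+) to (\S+)/i.exec(line)?.[2]
    if (target !== undefined && !branches.includes(target)) {
      branches.push(target)
    }
  }
  // Equivalent of `head -n <n>`, applied after de-duplication
  return branches.slice(0, n)
}

// Hypothetical helper mirroring `parent`, one pipeline stage at a time
function parentBranch(showBranchLines: string[], currentBranch: string): string | undefined {
  const candidates = showBranchLines
    // grep '*': lines carrying the current branch's marker
    .filter((line) => line.includes('*'))
    // grep -v "<current branch>" (grep uses a regex; a plain substring here)
    .filter((line) => !line.includes(currentBranch))
  // head -n1
  const first = candidates[0]
  if (first === undefined) {
    return undefined
  }
  // sed 's/.*\[\(.*\)\].*/\1/': keep the text inside the brackets
  const name = /.*\[(.*)\].*/.exec(first)?.[1] ?? first
  // sed 's/[\^~].*//': drop a relative suffix such as ^ or ~2
  return name.replace(/[\^~].*/, '')
}

const reflog = [
  'ae956a5 HEAD@{4}: checkout: moving from main to chore/update-new-env-gh',
  'ae956a5 HEAD@{5}: checkout: moving from chore/update-node-ci to main',
  'ae956a5 HEAD@{7}: checkout: moving from main to chore/update-node-ci',
  'aafa50f HEAD@{9}: checkout: moving from docs/update to main',
  'fba6d0a HEAD@{11}: checkout: moving from fix/github-actions-permissions to docs/update',
]
console.log(recentBranches(reflog, 5).join(', '))
// chore/update-new-env-gh, main, chore/update-node-ci, docs/update

// Made-up `git show-branch` output with feature/login checked out
const showBranch = [
  '* [feature/login] Add login form',
  ' ! [main] Update README',
  '--',
  '*  [feature/login] Add login form',
  '*+ [main] Update README',
]
console.log(parentBranch(showBranch, 'feature/login')) // main
```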

#### Working with a bare git repository

:::tip

Working within a normal git repository covers most needs. However, a bare repository combined with git worktrees makes it possible to switch between different pieces of work seamlessly, without having to clone the repository over and over again.

:::

1. `clone-bare` - Pass in a git URL to clone it into a bare repository.

```txt

clone-bare = !git clone --bare

```

2. `wa` ("worktree add") - Within a bare repository, this git alias takes two arguments: the first is the new branch name and the second is the base branch. The new worktree is created at a path matching the branch name, with the new branch based on the base branch.

Note: the command `git rev-parse --git-common-dir` ensures that worktree paths are always generated from the root of the bare repository, even when the alias is run from inside another worktree.

```txt

wa = "!sh -c \"cd $(git rev-parse --git-common-dir) && git worktree add --no-track -b '$1' '$1' '$2'\" -"

```

3. `fetch-some` - Inside a bare repository, fetch each branch in a comma-delimited list and update the local branch of the same name. This is useful for bringing several local branches up to date with their remote counterparts in one go.

```txt

fetch-some = "!f() { IFS=','; for b in $1; do git fetch origin $b:$b; done; unset IFS; }; f"

```
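To make the argument handling of `wa` and `fetch-some` concrete, here is a rough TypeScript sketch of the git commands each alias ends up running; `worktreeAddCommand` and `fetchSomeCommands` are hypothetical names used only for illustration. Note that a `<branch>:<branch>` refspec without a leading `+` only updates the local branch when the update is a fast-forward.

```ts
// Hypothetical helper: the command `git wa <branch> <base>` runs after its
// `cd` into the bare repository's common directory. The worktree path equals
// the branch name, and the branch is created from <base> without tracking.
function worktreeAddCommand(branch: string, base: string): string[] {
  return ['git', 'worktree', 'add', '--no-track', '-b', branch, branch, base]
}

// Hypothetical helper: `git fetch-some` splits its argument on commas
// (IFS=',') and runs one fetch per branch, writing the remote branch into
// the local branch of the same name. Empty entries are skipped here.
function fetchSomeCommands(branchList: string): string[][] {
  return branchList
    .split(',')
    .filter((branch) => branch.length > 0)
    .map((branch) => ['git', 'fetch', 'origin', `${branch}:${branch}`])
}

console.log(worktreeAddCommand('feature/login', 'main').join(' '))
// git worktree add --no-track -b feature/login feature/login main
for (const command of fetchSomeCommands('main,develop')) {
  console.log(command.join(' '))
}
// git fetch origin main:main
// git fetch origin develop:develop
```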